AI Image Generators: Derivatives, Not Originals

By Gene Keenan

The rise of AI image generators like DALL-E, Midjourney, and Stable Diffusion has been heralded as a revolutionary creative force. These systems can conjure seemingly infinite visual permutations with a simple text prompt. Creatives at agencies are freaking out about the power of AI and what it could mean for their careers. But beneath the dazzling outputs lies an uncomfortable truth – these AI models are not truly creative in the most profound sense. They are derivative works built upon copying and remixing existing data.

AI image generators are, at their core, products of human ingenuity. They are trained on extensive datasets of existing images and their associated descriptions. Through intricate machine-learning algorithms, they learn to map patterns between visuals and text. When a user provides a prompt, the model, guided by its training, attempts to create a new visual that aligns with the given parameters.

This has significant implications. AI models can only perceive and generate things that are already encapsulated in their training data in some form. They cannot genuinely imagine wholly new visual concepts from first principles. Without examples in their datasets, they cannot faithfully render highly novel or logically contradictory scenes.

Let’s take the example of creating “a wooden wagon wheel with only three spokes radiating from a center hub placed at equal distances forming thirds.” This violates the conventional model of a wagon wheel, which has many spokes radiating from the center. Unless the training data explicitly contained such an abnormal construct, even a highly capable AI will fumble. It may approximate the wheel by sampling bits of different wheels and combining them awkwardly. But it cannot reason from scratch about engineering an inherently new type of wheel designed for some presently inconceivable purpose. I have had many people try for hours to construct this wagon wheel, without success.

The images AI models produce are akin to intelligent kitbashing or photo bashing—taking existing pieces and recombining them in new ways, much like special effects artists do by piecing together model components. However, they cannot imagine something entirely new and coherent from the void, guided only by internal reasoning instead of recycled data.

It’s crucial to understand the limitations of current AI technologies. While they are impressive tools that can enhance human creativity, they are not a direct path to achieving unbounded artificial general intelligence.

There is immense value in having AI assistants that can remix the world’s existing knowledge in creative ways. But we should not conflate that with the capacity for authentic, unbounded conception. For now, AI image and art generators remain tools built atop the human artistic heritage – a heritage they sadly (thankfully?) cannot truly transcend on their own.

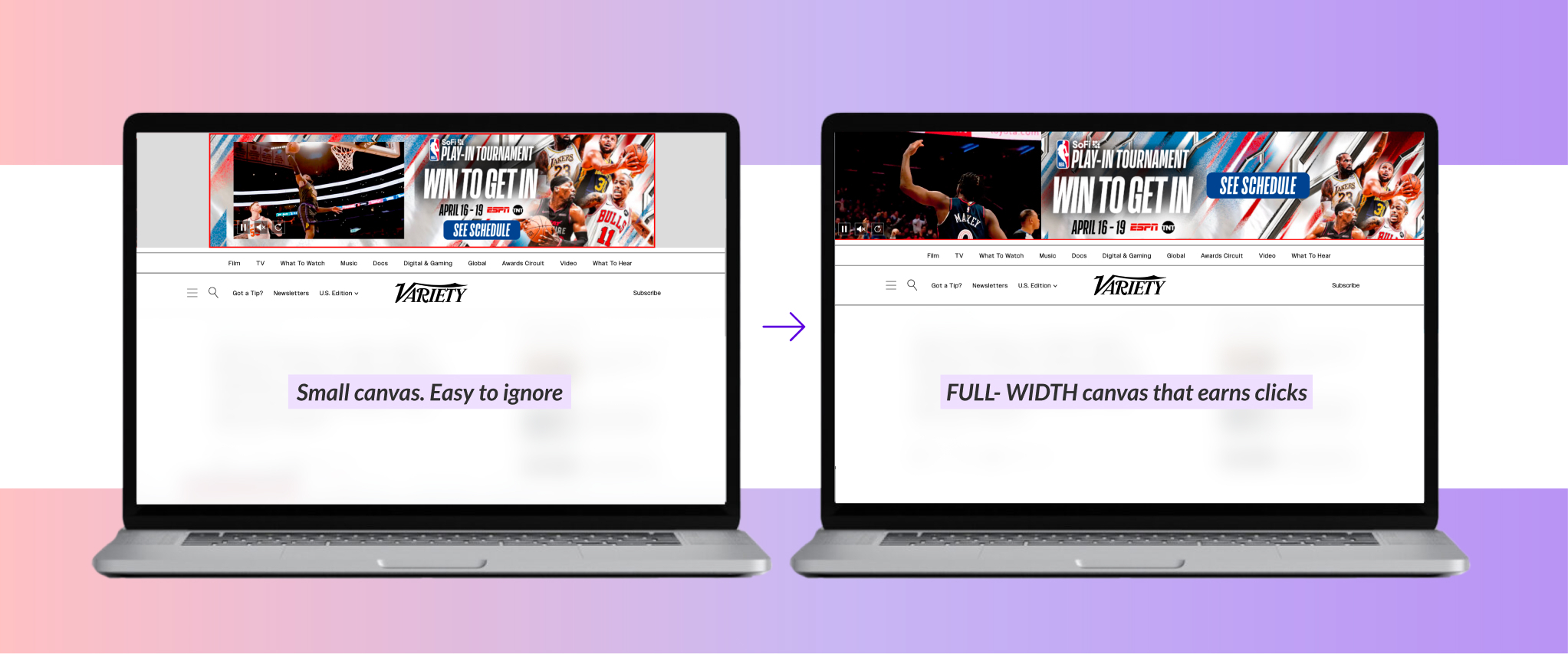

From an advertising perspective, the big AI image generators (e.g., Midjourney, DALL-E, Stable Diffusion) cannot yet create complex, layered images with transparent backgrounds. This makes dynamic creative optimization (DCO) using AI a mixed bag at best: you cannot swap out specific elements within an animation based on a data feed (e.g., weather, location, or time). The best way to think about this is that AI image generation has become another myth about producing better creative, in the same way that the rush for data 10 years ago didn’t deliver. If everyone has the same data, there is no advertising advantage. The same goes for AI image generation.
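To make the DCO point concrete, here is a minimal sketch of what swapping elements from a data feed looks like when a creative is built from separate transparent layers. Everything in it is hypothetical and for illustration only: the `Layer` type, the `pick_layers` function, the feed keys (`weather`, `hour`), and the asset file names are assumptions, not part of any real ad platform or of ResponsiveAds’ tools.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Layer:
    name: str   # role of the layer in the creative
    asset: str  # hypothetical file name of a transparent PNG


def pick_layers(feed: dict) -> List[Layer]:
    """Choose one asset per layer from a data feed (illustrative rules only)."""
    weather = feed.get("weather", "clear")
    hour = feed.get("hour", 12)

    # Each layer is decided independently, which is only possible
    # because the creative exists as separate, stackable pieces.
    background = "night_sky.png" if hour >= 18 or hour < 6 else "day_sky.png"
    overlay = "rain.png" if weather == "rain" else "sun.png"

    return [
        Layer("background", background),
        Layer("weather_overlay", overlay),
        Layer("product", "product_cutout.png"),
    ]


layers = pick_layers({"weather": "rain", "hour": 20})
print([layer.asset for layer in layers])
# ['night_sky.png', 'rain.png', 'product_cutout.png']
```

A single flattened image from a generator gives you none of these seams: there is no separate product cutout or weather overlay to swap, so every feed combination would need its own full render.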

So, for all those creatives at agencies concerned about their jobs, I suggest using AI as a tool for quick ideation and as one more way to enhance your creativity. Human-powered innovation is still the best in the market today. ResponsiveAds provides tools to creatives, brands, publishers, and agencies to accomplish this.

Face The Music: The world will be boring if we depend on AI to regurgitate existing ideas.
